Coding with AI: From Blank Canvas to Working App in a Day

This post documents what it felt like to build software with AI, starting from a clean slate with minimal tooling and a curious mindset. The goal isn’t to teach: I’ve been coding professionally for years, but I’m not an expert in coding with AI, and working this way is still new territory for me. These notes capture my experiments, prompts, roadblocks, and small wins along the way.

If you’ve got tips or stories of your own, I’d love to hear them.

The experiment

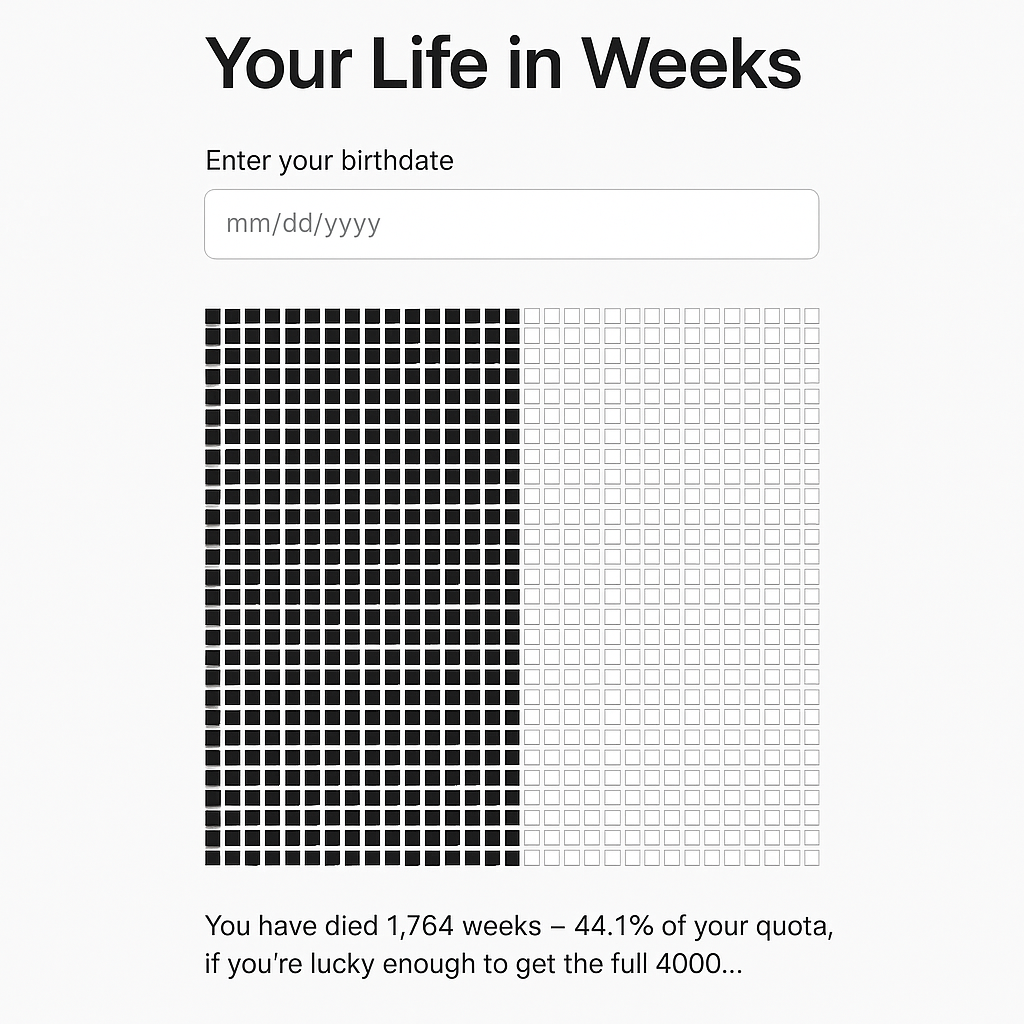

I wanted to build something simple: a web app that visualizes your life in weeks. Each week is a box. Past weeks are dark, future ones are light. You choose your birthdate, and it instantly shows you how many weeks have passed, how many are left, and what percentage of your (approximate) 4000-week lifespan you've lived.
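The core arithmetic behind the grid is small. Here’s a minimal Python sketch of it (not the app’s actual code): count whole weeks since the birthdate, clamp the result to the 4000-week lifespan, and derive the percentage. The `life_in_weeks` function and the 50-column grid width are assumptions for illustration; the column count only matters for finding the row where dark and light boxes meet.

```python
from datetime import date

LIFESPAN_WEEKS = 4000  # the approximate lifespan the app uses
COLUMNS = 50           # hypothetical grid width; the real layout may differ


def life_in_weeks(birthdate: date, today: date) -> dict:
    """Split a 4000-week lifespan into weeks lived and weeks remaining."""
    days_lived = (today - birthdate).days
    # Count whole weeks only, clamped so the grid never shows fewer than 0
    # or more than 4000 dark boxes (e.g. a future birthdate yields 0).
    weeks_lived = max(0, min(days_lived // 7, LIFESPAN_WEEKS))
    return {
        "weeks_lived": weeks_lived,
        "weeks_left": LIFESPAN_WEEKS - weeks_lived,
        "percent": 100 * weeks_lived / LIFESPAN_WEEKS,
        # 0-based row where dark and light boxes meet (where the % label goes).
        "boundary_row": weeks_lived // COLUMNS,
    }


result = life_in_weeks(date(1990, 1, 1), date(2025, 1, 1))
print(f"{result['weeks_lived']} weeks lived, {result['weeks_left']} left, "
      f"{result['percent']:.1f}% of 4000")
```

With a birthdate of 1 January 1990 and “today” pinned to 1 January 2025, that comes out to 1826 weeks lived and 2174 left.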

I used ChatGPT to help craft the initial prompt, iterate on mockups, and step in for debugging when Cursor got stuck in a loop. Most of the development happened in the Cursor IDE.

You can try it here: 4000.mauromorales.com. Code’s on GitHub.

Why I Start with a Prompt for the Prompt

In my experience, I get better results when I first ask ChatGPT to generate a prompt based on my own rough notes or ideas—rather than jumping straight into writing one myself. You might wonder: if I already know what I want, why not just write the full prompt directly?

For me, it’s a bit like brainstorming. I might have a clear goal in mind—or just a few scattered thoughts—and by handing those to ChatGPT, I get back a structured version of what I’m trying to say. Reading that output helps me spot gaps, clarify assumptions, and refine the ask. It’s like having someone else paraphrase your request so you can check: “Did you get what I meant?” That loop has helped me avoid misunderstandings and sharpen the initial direction before any code gets written.

Here’s the initial prompt I gave ChatGPT:

Help me write a prompt to build one single page app that has 4000 boxes each representing a week of a person's life. Every week that has passed will be painted in black or a dark color, every coming week in white or a light color. Ideally all black and white. There is a date picker to select your birthdate; once selected, it will automatically calculate how many weeks from the 4000 have passed and paint them, and how many are pending and keep them clear. A percentage is also displayed next to the row where the black and white boxes meet.

From there, we riffed on mockups (see image below) and iterated until it felt right. I asked ChatGPT to generate a prompt for Cursor (my AI coding assistant), restricting it to Tailwind only—no other dependencies. In hindsight, that constraint backfired: it kept defaulting to raw CSS, which didn’t play well with Tailwind’s utility-first mindset.

Coding with AI: What it was like

The first version worked—it looked close enough to the mockup. But it didn’t hold up on mobile, and dark/light mode was glitchy. I replaced the native date picker with one from Flowbite, which broke everything. The AI couldn’t fix it, so I had to step in and debug manually.

Other rough edges:

- The static app needed fingerprinting for its CSS/JS assets to avoid caching issues. When I asked, the AI only fingerprinted the CSS, so I had to request the JS separately. It worked in the end, but it required hand-holding.

- I requested a small feature to load a date via the URL: nailed on the first try.

- I asked for a randomizer mode (simulate life expectancy between 0 and 5000 weeks): almost worked, just needed tweaks.
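For anyone unfamiliar with fingerprinting: the idea is to put a hash of each file’s contents into its name, so a changed file gets a new URL and browsers can’t keep serving a stale cached copy. This post doesn’t show how the app does it, so what follows is only a minimal Python sketch under assumed names: a flat static site folder containing index.html, style.css and app.js, and hypothetical `fingerprint` and `build` helpers.

```python
import hashlib
import shutil
from pathlib import Path


def fingerprint(asset: Path) -> Path:
    """Copy an asset to a name embedding a short hash of its contents.

    e.g. style.css -> style.<8 hex chars>.css. Changing the file changes the
    hash, hence the URL, so browsers can't keep serving a stale cached copy.
    """
    digest = hashlib.sha256(asset.read_bytes()).hexdigest()[:8]
    target = asset.with_name(f"{asset.stem}.{digest}{asset.suffix}")
    shutil.copyfile(asset, target)
    return target


def build(site_dir: Path, assets: list[str]) -> None:
    """Fingerprint each asset and point index.html at the hashed names."""
    index = site_dir / "index.html"
    html = index.read_text(encoding="utf-8")
    for name in assets:
        hashed = fingerprint(site_dir / name)
        # Naive string replacement: assumes each asset is referenced by its
        # plain file name and that the name doesn't appear elsewhere in the page.
        html = html.replace(name, hashed.name)
    index.write_text(html, encoding="utf-8")
```

Calling `build(Path("dist"), ["style.css", "app.js"])` on such a folder would keep the original files, add hashed copies, and point index.html at them. The plain string replacement is deliberately naive; a sturdier setup would parse the HTML or write out a manifest of old and new names.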

All in all, it took a couple of hours from idea to delivery.

Pairing with AI isn’t like pairing with a person—and that matters

So far, I think of AI as a pairing partner. It’s good at some things, but not at everything.

One big difference: it’s always confident. A human pair might say “I’m not sure,” or you’d pick up on hesitation in their tone or body language. AI doesn’t do that. I’d love it if these tools could express uncertainty in their language, using phrases like “I think”, “maybe”, or “this tends to work” when the solution isn’t guaranteed.

Another loss comes from speed. The AI writes code instantly, which leaves no room for the natural pauses that happen when pairing with a human. You miss the chance to say: “What command did you use just now?”, “Why that approach?”, or “Oh, this reminds me of…” Those micro-reflections spark understanding and, sometimes, new ideas. Without them, the process becomes transactional, and I think that’s a missed opportunity.

Maybe it’s my fault for expecting it to feel like real pairing. Pairing isn’t just about building—it’s about learning together, spreading context, and sharing reasoning. Maybe I need a better analogy. And maybe finding that analogy will help me use AI more effectively.

What I learned

1. Clean code isn’t the goal anymore

I was talking to a friend at a big tech company, and we ended up discussing how clean code isn’t always a priority—especially at scale. In a monolith, you care about maintainability. You can’t just throw everything away each time you want to make a change. But with microservices, it’s different. They’re small, isolated, and often disposable. Sometimes, rewriting is cheaper than refactoring.

That’s where AI starts to shine. It’s often faster to generate a scoped, clean version of a feature than to surgically patch bloated legacy code.

This app wasn’t meant to be a microservice, but it’s small enough that the same principle applies. I haven’t read the full codebase—and honestly, I don’t feel the need to. Some decisions along the way already feel like prototype-quality work, not something I’d want to maintain long-term. And maybe that’s okay. If the cost of rewriting is near-zero, maybe “clean” doesn’t matter in the same way it used to.

2. Debugging feels like context-switching into someone else’s project

Even when I technically wrote the code, the AI rewrote pieces so often that I’d revisit "done" sections to make sure nothing silently broke. It felt like jumping into a fresh repo each time.

3. AI doesn’t validate its own work

It happily rewrote working code without checking whether the change actually functioned. For a simple app, I could play QA. But for anything more complex, this is risky—especially for people who don’t know what testing even looks like. At a minimum, AI tools should surface test coverage, suggest test cases, or provide validation options.
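To make that concrete for this app: even a handful of unit tests would flag a silent regression in the week math. Here’s a minimal sketch using Python’s built-in `unittest`. The `weeks_lived` helper is hypothetical and follows an assumed rule (whole weeks since birth, clamped to the 0 to 4000 range), not the app’s real code.

```python
import unittest
from datetime import date

LIFESPAN_WEEKS = 4000


def weeks_lived(birthdate: date, today: date) -> int:
    """Hypothetical helper: whole weeks since birth, clamped to 0..4000."""
    return max(0, min((today - birthdate).days // 7, LIFESPAN_WEEKS))


class WeeksLivedTest(unittest.TestCase):
    def test_birth_day_is_week_zero(self):
        self.assertEqual(weeks_lived(date(2000, 1, 1), date(2000, 1, 1)), 0)

    def test_six_days_is_still_week_zero(self):
        self.assertEqual(weeks_lived(date(2000, 1, 1), date(2000, 1, 7)), 0)

    def test_seven_days_is_one_week(self):
        self.assertEqual(weeks_lived(date(2000, 1, 1), date(2000, 1, 8)), 1)

    def test_future_birthdate_clamps_to_zero(self):
        self.assertEqual(weeks_lived(date(2100, 1, 1), date(2000, 1, 1)), 0)

    def test_long_life_clamps_to_lifespan(self):
        # 100 years is well over 4000 weeks, so the result is capped.
        self.assertEqual(weeks_lived(date(1900, 1, 1), date(2000, 1, 1)), LIFESPAN_WEEKS)
```

Saved as `test_weeks.py`, it runs with `python -m unittest test_weeks`. Edge cases like these (the birth week, the seventh day, out-of-range dates) are exactly the kind of checks I’d want an AI tool to propose on its own.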

4. The right words matter

I wasted time with vague phrasing like “the styles aren’t updating.” When I got specific, for example asking how to fingerprint style.css to avoid cache issues, the AI nailed it.

Lesson: technical vocabulary = better results.

5. Screenshots helped but were not enough

Combining screenshots with text worked better than text alone, especially when describing visual bugs or layout issues. Even so, layout remained the hardest part: sometimes it wasn’t enough to describe what I wanted visually, even with screenshots. In some cases, I had to explicitly tell the AI: “Put this <div> inside that one, add this class, give it this attribute.” The specificity mattered. The vaguer I was, the more it hallucinated or improvised in the wrong direction.

6. It never pushed back

The AI treated my prompts as gospel—even when better options existed. It never suggested fingerprinting early, or warned that it preferred raw CSS over Tailwind. It didn’t offer alternatives, opinions, or guardrails. I want tools that challenge my assumptions, especially when it comes to things like security.

7. AI-generated Git commits: hit or miss

I experimented with letting AI write my Git commit messages. Sometimes they were great. Other times they just echoed obvious file changes, which I’d rather avoid.

8. Delegating front-end work felt great

I’m more of a back-end dev, so I was happy to hand off the UI. I still reviewed and tweaked things, but I didn’t get bogged down in unfamiliar territory. If tools like these keep improving, maybe we’ll all become full-stack by default—not because we master every layer, but because we can delegate what we don’t specialize in.

That said, deep expertise still matters. Especially for architecture, scaling, or debugging hard problems.

9. Abstractions raise the floor—but also the ceiling

Some worry that using AI makes your coding muscles weak. I get that. But it feels more like moving to a higher-level language. You still need to understand what’s happening under the hood to use it well. It’s not a shortcut past skill—it’s a shift in where skill gets applied.

Wrapping up

This project reminded me that good prompts matter—but so does good judgment. AI helped me ship something I might’ve abandoned otherwise. It’s not perfect, but it’s done. That’s a win.

If you’ve built something with AI—or you’re experimenting with it in your workflow—I’d love to hear how it went. I’m especially curious about how others are navigating the mix of delegation, debugging, and discovery. What did it help with—and where did it fall short?

Update: What if the app didn’t need to be an app?

After building and deploying the site, a funny thought hit me: this thing is so small—do I even need a web app?

So I asked ChatGPT to generate a GPT that is the app.

Functionality-wise, it nailed it on the first try. The visual output? Awful. No matter how much we tweaked it, the graphical layout never felt right. But then we landed on a workaround: a Python script that generates an ASCII representation of the graph. Surprisingly satisfying.
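The GPT’s script isn’t shown here, but the approach is easy to sketch. Below is a minimal Python version under my own assumptions: a hypothetical `ascii_life_grid` function, 52 boxes per row (roughly one year), `#` for weeks lived, `.` for weeks left, and the percentage printed next to the row where the two meet, echoing the original prompt.

```python
LIFESPAN_WEEKS = 4000
COLUMNS = 52  # hypothetical: one row is roughly one year


def ascii_life_grid(weeks_lived: int, columns: int = COLUMNS) -> str:
    """Render the lifespan as text: '#' per week lived, '.' per week left."""
    weeks_lived = max(0, min(weeks_lived, LIFESPAN_WEEKS))
    cells = "#" * weeks_lived + "." * (LIFESPAN_WEEKS - weeks_lived)
    rows = [cells[i:i + columns] for i in range(0, LIFESPAN_WEEKS, columns)]
    # Label the row where lived and remaining weeks meet with the percentage.
    # Clamp so a fully lived lifespan doesn't index past the last row.
    boundary = min(weeks_lived // columns, len(rows) - 1)
    rows[boundary] += f"  {100 * weeks_lived / LIFESPAN_WEEKS:.1f}%"
    return "\n".join(rows)


print(ascii_life_grid(1826))
```

For 1826 weeks lived, that prints 77 rows, with the percentage on the 36th row, the first one that mixes `#` and `.`.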

What makes this version more interesting is the interaction model. You don’t need to pick a date from a UI—you can just say “I’m 22 years old,” or “I was born in Ecuador in 1990,” or “I’m of Asian descent.” It adapts the calculation accordingly. You can also configure the GPT to only respond about the life-weeks concept and nothing else.

Is it perfect? No. But for 30 minutes of experimentation, I think it’s a success—and maybe even more accessible than the web version for some people.

You can try it here: Your Life in Weeks GPT